This page aims to address most of your queries with regards to Nutanix Collector. While we are happy to engage in insightful conversations over the slack channel, we request you to please go through the FAQs on this page before reaching out to us via slack or email.

How can my prospect or customer access Collector?

Prospects and Customers can access Collector in a few ways:

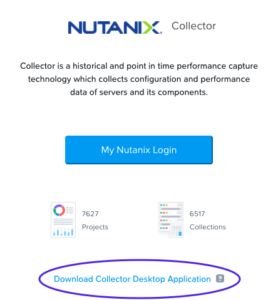

Download Collector via Collector Login Page as seen in the screenshot below:

Collector Public Download Link – no registration required

Collector Download Link for MyNutanix Users

The last approach via MyNutanix registration would also give our users access to the Collector Portal.

How do we report issues in Collector?

You can report issues in Collector by filling this Google form. The form can also be accessed by customers, prospects, and partners.

Why should my customer or prospect use Collector Portal?

Replicates the exact same view as seen by your prospect or customer

Share and Collaborate with peers, customers/prospects, capacity planning experts for improved sizing.

Collector Portal is enhanced on a regular basis to add more value – for example, VM provisioning status, VM list tab with the consolidated view, etc.

Data gathered by Collector across 200K+ VMs says 90+% of VMs are over-provisioned

How can I view the Collector output generated by my customer or prospect?

Collector contains data gathered from the customer or prospect data centers and hence the data gathered by customer or prospect is not accessible by anyone unless the data is explicitly shared.

How can my customer or prospect share the data gathered by Collector with me?

There are a few ways to request data from your customer or prospect:

- Request the Collection file (zip file) and create a project in the Collector portal to replicate the exact same view as your customer or prospect. You can also generate an XLSX file once the project is created.

- If the customer or prospect has already created a project in Collector Portal, you can request them to use the “Share” option to share the project with you. For more details on sharing projects, please refer to the User Guide.

- Request the XLSX file generated by Collector, the XLSX file can be used to analyze the data and import the data into Sizer but XLSX can’t be used to replicate the visual views in Collector Portal.

How can I create a “Project” in the Collector Portal?

You can create a Project using the Collection zip file. For detailed steps, please refer to “Creating a Project” in the Collector Portal User Guide available here

How can I invite new users to Collector Portal?

We have simplified the process of inviting users to the Collector Portal. If you have an existing project which you want to share, just go ahead and share the project with them. If the user is not registered on Collector Portal, we will identify the same and then invite the user and share the project via 1-click.

If you want to invite users without sharing any projects, please use the “Invite” option present within the top right section under the “Summary” page of Collector. For more details, please refer Collector Portal User Guide available here

Where can I find the documents associated with Collector?

Please refer to this Portal link for User Guide, Release Notes, and Security Guide.

Is there any Collateral that I can share with my prospect or customer to brief them about Collector?

We have a flyer that is currently a work in progress – “Capacity Planning Data Collection in 30 seconds”. We will update this FAQ once the collateral is ready. Draft version available now – Collector in 30 seconds

Does the data gathered by Collector include CVM resources?

In the case of vCenter/ESXi or Hyper-V, the data includes CVM resources. Please ensure to turn off the CVMs before sizing to ignore the resources consumed by CVM.

In the case of Prism/AHV, the data does not include CVM resources as these are not needed for sizing. At the same time, we plan to enhance Prism/AHV to optionally show resources along with CVM.

Sizing FAQs

Can I trace back a solution back to the requirements gathered by the Collector?

Yes, if you happened to use Collector Portal and exported to Sizer. Recently, we have introduced the ability to view all the related sizings associated with the Collector Project. This mapping would be extremely beneficial in case of any customer satisfaction issues where the workload has changed pre and post-deployment.

Why are the Processor selection options disabled when I export the Collector output to Sizer?

Collector automatically considers the CPU based on the data gathered from the existing customer or prospect environment and hence the processor selection options are disabled. In the future, we plan to enhance UI to make this more intuitive.

How can I selectively turn off or on certain VMs before exporting the data to Sizer?

There are a couple of ways in which you can selectively turn off or on certain VMs before sizing via exported XLSX file.

If you using XLSX export in Sizer and want to power OFF a few VMs, you need to make a couple of changes:

a) In the “vInfo” sheet, edit the ‘Power State’ column value from “poweredOn” to “poweredOff”.

b) In the “vCPU” sheet, clear the values of the mentioned columns for the VMs that you desire to turn off – ‘Peak %’, ‘Average %’, ‘Median %’, ‘Custom Percentile %’ and ’95th Percentile % (recommended)’

Save the sheet and import data in Sizer.

Note: If you miss (b) you may see the below error:

Data being imported contains one or more VMs that were powered ON during the collection period and reported CPU utilization.

We recommend you to size both Powered ON & Powered OFF VMs.

How can I selectively decide to place VMs in different clusters when sizing?

Similar to turning off/on the VMs, you can selectively specify the target cluster before sizing.

If you are using Collector Portal, you can go to the “VM List” tab and edit the ‘Target Cluster’ field before exporting the data to Sizer.

If you using XLSX export, you can edit the ‘Target Cluster’ column under the “vInfo” sheet and save it before importing data in Sizer.

You can also edit multiple VMs in one go via “Bulk Change” option.

Can we export more than one Collector Project to the same Sizer scenario?

Unfortunately, this is not possible via Collector Portal but you can do it directly in Sizer using the Import Workload functionality.

If you have the Collector XLSX file please import the individual project XLSX files in Sizer one by one within the same Sizer scenario.

You can get the XLSX from the Collector portal using the “Export to XLSX” functionality.

Is there any document explaining various options while exporting data to Sizer?

Yes, there is a detailed help page documenting various options available when exporting to Sizer, guidance, and caveats around the same. Please here the same here – Exporting Collector Data to Sizer

How can I view the Sizings created via Collector Portal in Sizer using Salesforce Login?

We plan to merge Sizer & Collector Portal in the future. But for now, there are a couple of workarounds to access the Sizings created via Collector Portal in Sizer when using Salesforce login:

1) If you know the scenario number, just open any scenario and replace the scenario number with the one you want to view.

2) If you don’t remember the scenario number, you the search functionality in Sizer. Search functionality is located on the left of your username (that can be seen at the top left corner). Navigate to the “Advanced” tab in the search dialog and use “Scenario Owner” with the value ‘Created by me’ and you should be able to view the scenario created via Collector Portal.

CPU FAQs

Are the existing environment CPUs considered during sizing when we export Collector output to Sizer?

Yes, when you either export Collector data to Sizer or import Collector data from Sizer, the input CPUs are considered.

Storage FAQs

Does Collector report raw storage or usable storage?

Collector reports both raw storage and usable storage. At the cluster level, the storage represents raw cluster storage. Usable storage can indirectly be calculated using the vDisk tab of the XLSX export. There are a couple of caveats around the storage metrics, please refer to other questions under this section.

Does the Collector capture snapshot information?

As of today, Collector gathers storage consumed by snapshots when we take the vCenter/ESXi route. But this data is missing in data gathered via Prism/AHV or Hyper-V. Very soon, we plan to enhance Prism/AHV to gather storage metrics around snapshot.

Does Collector report Datastores, RDM, or iSCSI disks?

Unfortunately, as of today, Collector does not report RDM or iSCSI disks. But we do have this in our roadmap.

Security FAQs

How can I convince my customer or prospect that Collector is safe to the user?

Nutanix does not compromise on the security of our customers, partners, and prospects. We have comprehensive security measures in place and the same is made available in the security guide available here. Additionally, the security guide is bundled along with Nutanix Collector bits.

Is there an option to mask sensitive information gathered by the Collector?

Yes, with the launch of Collector 4.0, users do have an option to mask information that might be considered sensitive. This option is available while exporting the data to .XLSX format. We will soon be enabling masking of data even in the Collection file (.zip file).

Hyper-V FAQs

How can we initiate performance data collection in the case of the Hyper-V cluster?

There is a detailed help page on Collector support for Hyper-V, please refer to Collector 3.3 page

Can we gather data of more than 1 cluster at a time?

At this moment, in the case of Hyper-V, Collector can gather data from only one cluster at a time.

Does the collector support a standalone Windows server?

Not yet, it is on the roadmap.

AHV FAQs

Why can’t I see the Guest OS details in case of data gathered via Prism/AHV?

For Collector to gather Guest OS details, NGT needs to be installed and enabled on the guest OS. Collector 3.5.1 is enhanced to pick up guest OS details if the pre-requisites are met.

vCenter/ESX FAQs

Can Collector gather data from standalone ESXi hosts that are not managed by vCenter?

As of today, Collector gathers data from ESXi hosts is via vCenter APIs and hence we can’t pull data from a standalone ESXi host.

ONTAP FAQs

How can I export Collector output from the ONTAP system to Sizer?

We are currently working on supporting the export of ONTAP output to Sizer. For now, you will have to manually analyze the Collector output and feed it to Sizer. ETA: mid-Jan 2022

Misc FAQs

How can I identify the Hypervisor in use at the end-user site using Collector output?

The “vDatacenter” sheet within the XLSX can be used to identify the Hypervisor in use. The ‘MOID’ column within the “vDatacenter” maps to the Hypervisor as shown below:

| MOID |

Hypervisor |

| Prism Element |

AHV |

| Prism Central |

AHV |

| Hyper-V |

Hyper-V |

| Anything starting with “Datacenter” |

ESXi |

In the future, we do plan to add an additional column to call out the Hypervisor in use which would make this information self-explanatory and also display the same information UI.

What is the frequency at which performance data is gathered by Collector?

The granularity of performance data collection differs based on the hypervisor in use.

Performance data on both, ESXi & AHV, are gathered every 30 minutes. Most metrics are gathered over a period of 7 days.

In the case of Hyper-V, the frequency of performance data collection depends on the duration of data collection initiated. The below table provides a granularity of the data collected:

| Duration of Performance Collection |

Frequency of Data Collection |

Data points over the duration |

| 1 day |

5 minutes |

288 |

| 3 days |

10 minutes |

432 |

| 5 days |

20 minutes |

360 |

| 7 days |

30 minutes |

336 |

Does Collector gather IOPS & throughput-related information?

Yes, Collector gathers IOPS at cluster level and the throughput – disk usage and network usage at both cluster level as well as host level. The same can also be viewed in the XLSX export of Collector data. In the case of Collector Portal, the VM List tab in the performance view displays the same as well.

How is the VM Provisioning Status calculated?

The VM Provisioning Status is calculated based on the 95th percentile CPU utilization values. The categorization details can be seen in the tooltip and the same is mentioned below:

Normal: CPU utilization values is between 60% and 80%

Under-provisioned: CPU utilization > 80%

Over-provisioned: CPU utilization < 60%

Unknown: Utilization value is missing

Can I customize the VM Provisioning Status?

Yes, you can customize the VM Provisioning Status criteria using the “Manage” option next to VM Provisioning Status tooltip.

Can I edit the project name in Collector?

Yes, you can edit the project name. Please refer to the “My Projects” section within the Collector Portal User Guide available here

Is there a way to bulk edit the records under the VM List tab?

Collector Portal now allows you to edit multiple VMs in one go via the “Bulk Change” button. Use cases where bulk edits would be of great value:

Sizing a set of VMs in one cluster and the rest in another cluster. For example, all DB VMs “Target Cluster” values can be changed to a new cluster, say DB Cluster instead of default Cluster 1. The rest of the VMs can be left to default, Cluster 1, or further targeted towards specific clusters before exporting to Sizer.

Edit the resources allocated to the set of VMs. For example, all knowledge workers are not allocated enough vCPUs, increase all of them at once via bulk edit.

Is there a way to export the graphs from the Collector portal?

Unfortunately, not yet but we do plan to export to PDF or PPT in the future.

I am having issues with uploading the collection file to Collector Portal, what could be wrong?

If you happen to see the below error message:

Invalid input file. Collection Zip file is expected.

One of the possible reasons could be that the original zip file was extracted and zipped once again. Using the original zip file should resolve the issue. If the issue still persists please report the issue.

Where can I find the Collector support matrix?

Please refer to the “Nutanix Collector Compatibility Matrix” section under Collector User Guide available here

Who should I contact in case of any other queries?

Please reach out to us via collector@nutanix.com or reach out to us via slack channel – #collector

Alternatively, our partners, customers, and prospects can also reach out to us via Nutanix Community Page – Sizer Configuration Estimator

Report issues in Nutanix Collector – here

And you can always reach the Product Management @ arun.vijapur@nutanix.com

You have reached the end 🙂 Do let us know if you found this page useful or how we can make this better. Please feel free to share this page with your peers, partners, customers, and even prospects.

Thank you,

Team Nutanix Collector