RVTools is a leading tool for monitoring VMware clusters. It is a free Windows application (.NET 4) that queries VMware's VI (Virtual Infrastructure) API to collect numerous attributes. The VI API is exposed as a web service running on both ESX Server and VirtualCenter Server systems.

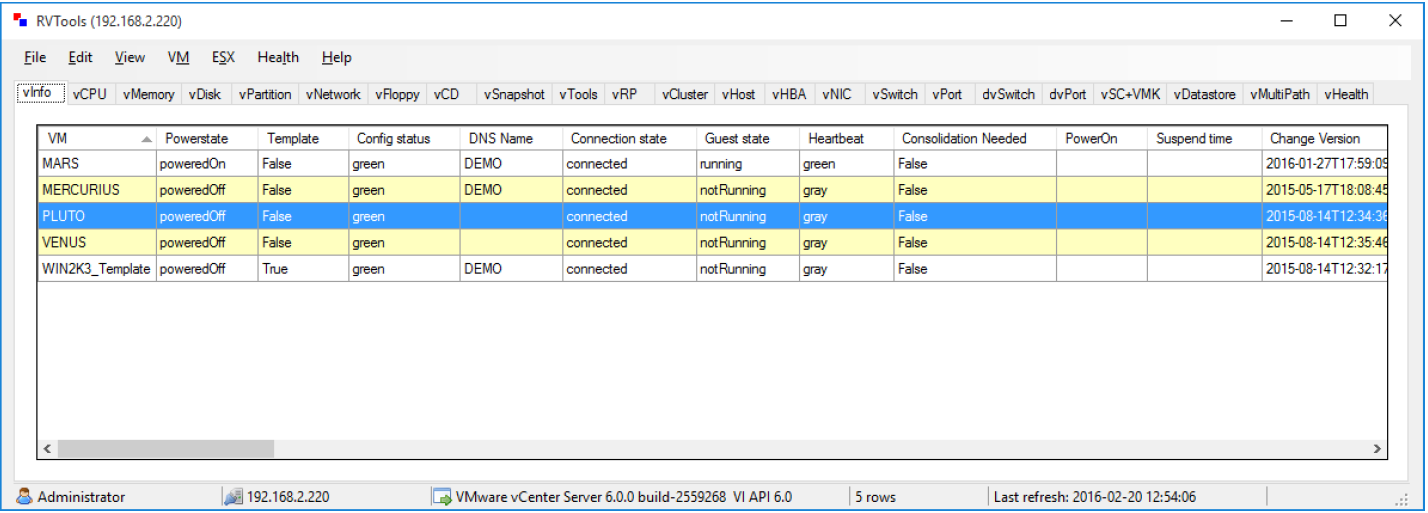

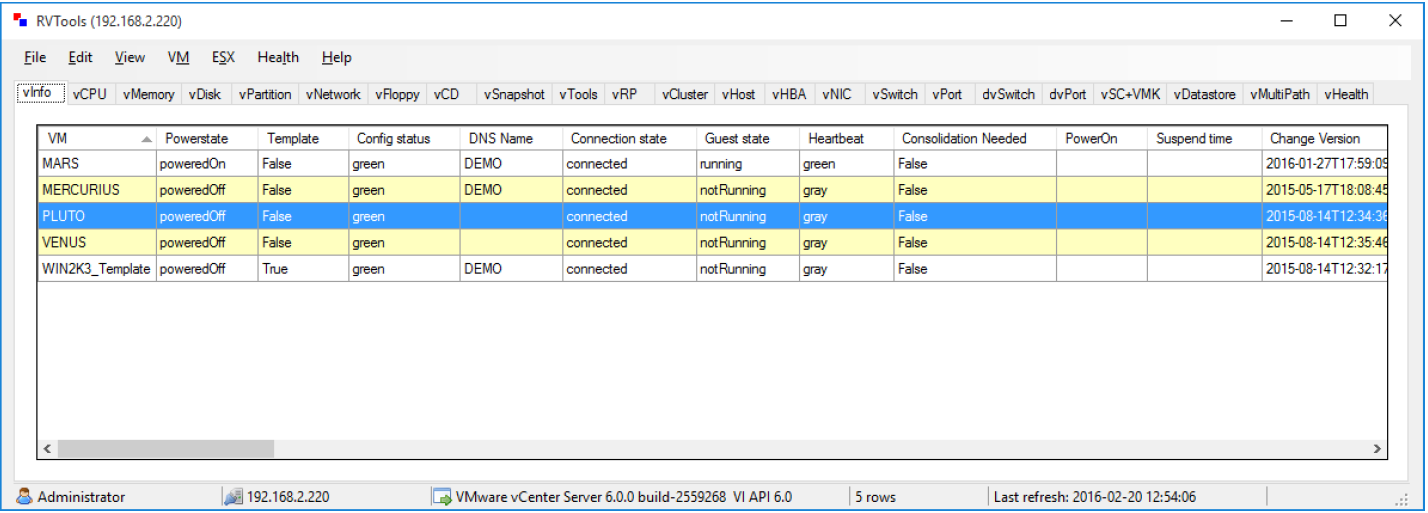

As the screenshots above show, the tool has a number of tabs that capture the details in tabular format.

- The NTNX SE or Partner SE installs the supported version of RVTools from Robware on their laptop. Alternatively, the customer often already has it installed and gives the SE the final Excel file.

- If the SE runs it on their laptop, they need the customer's permission and cooperation to point it at vCenter or ESX. A username and password are required, and the connection must not be blocked by a firewall. This can be a big issue, so it is often best to have the customer run RVTools.

- If the customer provides the final Excel output, the SE still needs the customer to agree that the configuration can be shared.

- The command line to run the tool and create the Excel output is included in this document.

- Once the SE has the Excel output, they import it into Sizer, which groups most of the VMs into one of 25 profiles.

- Sizer then returns an Excel file with a Sizer_summary tab that contains key information for each VM, including which profile was applied or whether the VM is “not covered”.

- The user should review this with the customer, especially the VMs not covered by a profile. These VMs are very high in CPU, RAM, or total capacity. Do they need to be that high? If so, the user should create a custom workload.

Does Sizer size all the VMs in the RVTools export?

No. Sizer sizes only those VMs for which the ‘covered’ column in the RVTool summary report is ‘Yes’.

There are a couple of parameters that can cause a VM to be not covered, and Sizer does not size these VMs:

- A customer may have many VMs, and often some are defined but turned off because they are actually dormant. Automatically sizing all VMs could cause Sizer to oversize the environment. Conversely, some powered-down VMs may host applications that are used only occasionally and still need to be sized.

- Sizer uses the powerstate flag. The SE should discuss this with the customer. If a VM will be migrated but was off when the tool was run, they should change its flag to poweredOn.

- Conversely, changing that flag to poweredOff tells Sizer not to size the VM.

- From the vPartition sheet in RVTools, we read two columns: Capacity MB and Consumed MB.

- We calculate the total Capacity MB and total Consumed MB for each VM, since a VM can have multiple partitions.

- If the VM is thick provisioned, we use Capacity MB; if it is thin provisioned, we use Consumed MB.

- We then group VMs based on CPU and RAM (for example, Small CPU and Small RAM).

- The storage capacity requirement for a group (for example, Small CPU and Small RAM) is calculated as the average of the total capacity of the VMs in that group.

- Capacity MB is used for thick-provisioned VMs and Consumed MB for thin-provisioned VMs in these calculations.

- Sizer tries to group each VM into one of 25 workload profiles. If a VM simply has higher capacity, CPU, or RAM requirements than any of the profiles, Sizer flags it for user review. The user can either assume the VM was over-provisioned and go with one of the profiles, or create a custom workload for that larger VM.
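The power-state and per-VM capacity rules above can be sketched in Python as follows. This is a minimal illustration, not Sizer's actual code: the `Partition` class, the `final_capacity_mb` function, and the input dictionaries are hypothetical, and the sketch assumes the vPartition rows, the Thin flag, and the powerstate value have already been read from the RVTools export into plain Python data, with a single Thin value per VM (RVTools reports Thin per disk on the vDisk tab).

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Partition:
    """One vPartition row in a hypothetical in-memory form."""
    vm: str
    capacity_mb: float
    consumed_mb: float


def final_capacity_mb(
    partitions: Iterable[Partition],
    thin_by_vm: Dict[str, bool],
    powerstate_by_vm: Dict[str, str],
) -> Dict[str, float]:
    """Return {vm: final capacity in MB} for poweredOn VMs only."""
    # A VM can have several partitions, so sum both columns per VM first.
    totals: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0.0])
    for p in partitions:
        totals[p.vm][0] += p.capacity_mb
        totals[p.vm][1] += p.consumed_mb

    result: Dict[str, float] = {}
    for vm, (capacity, consumed) in totals.items():
        # Only poweredOn VMs are sized; poweredOff (or missing) ones are skipped.
        if powerstate_by_vm.get(vm) != "poweredOn":
            continue
        # Thin -> Consumed MB, thick -> Capacity MB. A VM with no Thin value is
        # treated as thick here (an assumption, not documented Sizer behavior).
        result[vm] = consumed if thin_by_vm.get(vm, False) else capacity
    return result


if __name__ == "__main__":
    parts = [
        Partition("web01", 40960, 12288),
        Partition("web01", 20480, 5120),
        Partition("db01", 102400, 90000),
        Partition("old01", 51200, 1000),
    ]
    thin = {"web01": True, "db01": False, "old01": True}
    power = {"web01": "poweredOn", "db01": "poweredOn", "old01": "poweredOff"}
    print(final_capacity_mb(parts, thin, power))
```

With these sample inputs, old01 is skipped because it is poweredOff, web01 (thin) gets its summed Consumed MB of 17408, and db01 (thick) gets its Capacity MB of 102400.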

How are the usable capacities determined for VMs?

From the vPartition sheet in RVTools, we read two columns: Capacity MB and Consumed MB.

Finding capacity for sizing:

- If Thin = True, we use Consumed MB as the final capacity.

- If Thin = False, we use Capacity MB as the final capacity.

- (Note: the Thin value comes from the vDisk tab.)

- We group VMs into categories such as Small CPU and Small RAM.

- We keep separate lists for thin-provisioned and thick-provisioned VMs, so there can be a thin-provisioned Small CPU/Small RAM group and a thick-provisioned one.

- For each group in each list, we take the maximum capacity value across its VMs and apply 90% HDD and 10% SSD.
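A minimal Python sketch of this grouping step, assuming each VM has already been reduced to its CPU class, RAM class, Thin flag, and final capacity. The `group_storage` name and the tuple layout are hypothetical. It follows the max-based rule in this answer; the earlier answer describes the group value as an average instead, so replace `max` with a mean if that is the rule actually in use.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical input row: (cpu_class, ram_class, thin, final_capacity)
VmRow = Tuple[str, str, bool, float]
GroupKey = Tuple[str, str, str]


def group_storage(vms: Iterable[VmRow]) -> Dict[GroupKey, Tuple[float, float]]:
    """Return {(cpu_class, ram_class, "thin" or "thick"): (hdd, ssd)}.

    Thin and thick VMs land in separate groups even when their CPU and RAM
    classes match. Each group's capacity is the maximum final capacity of
    its VMs, split 90% HDD and 10% SSD.
    """
    group_max: Dict[GroupKey, float] = defaultdict(float)
    for cpu_class, ram_class, thin, capacity in vms:
        key = (cpu_class, ram_class, "thin" if thin else "thick")
        group_max[key] = max(group_max[key], capacity)
    return {key: (cap * 0.9, cap * 0.1) for key, cap in group_max.items()}


if __name__ == "__main__":
    rows = [
        ("Small", "Small", True, 100.0),
        ("Small", "Small", True, 60.0),
        ("Small", "Small", False, 200.0),
    ]
    for key, (hdd, ssd) in sorted(group_storage(rows).items()):
        print(key, round(hdd, 2), round(ssd, 2))
```

In this sample, the two thin VMs form one group sized at 100 (90 HDD, 10 SSD), and the thick VM forms its own group sized at 200 (180 HDD, 20 SSD).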

What are the prerequisites for running RVTools?

It is a Windows application that runs on a desktop or laptop with network connectivity to the cluster being analyzed. It needs a vCenter user ID and password to connect to the cluster.

How does Sizer create workloads using RVTools data?

- The user imports the RVTools Excel file into Sizer using the upload file button on the Import Workloads page.

- Sizer pulls all the information for each poweredOn VM.

- Sizer checks whether the VM fits one of the 25 profiles, choosing the smallest profile that still fits.

- Sizer then creates an Excel file with the Sizer_summary tab, which contains all the information Sizer collected and states the profile that was selected, or “not covered”.

What does the CPUs column mean in the RVTool summary sheet?

The values in the CPUs column of the Sizer summary sheet indicate the CPU profile for the VM.

As explained above, every VM is classified as Small, Medium, Large, and so on according to its vCPU count:

vCPU

- Small = 1

- Medium = 2

- Large = 4

- X-Large = 8

- XX-Large = 16

On what basis are VM vCPU and vMemory classified?

Below are the classification criteria for VM vCPU and memory:

vCPU

- Small <= 1

- Medium <= 2

- Large <= 4

- X-Large <= 8

- XX-Large <= 16

RAM

- Small < 1.024 GB

- Medium < 2.048 GB

- Large < 8.2 GB

- X-Large < 16 GB

- XX-Large < 32 GB
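These thresholds can be expressed as a small Python lookup. This is a sketch under stated assumptions, not Sizer's implementation: `classify_cpu`, `classify_ram`, and `profile_for` are hypothetical names, RAM is taken in GB (convert first if your memory values are in MB), the vCPU bounds are treated as inclusive and the RAM bounds as exclusive as written above, and only CPU and RAM are checked because no capacity limits are listed. Five CPU classes times five RAM classes gives 25 combinations, which matches the 25 profiles, though that mapping is itself an assumption.

```python
from typing import Optional

# vCPU classes: the first class whose (inclusive) upper bound covers the count.
CPU_CLASSES = [("Small", 1), ("Medium", 2), ("Large", 4), ("X-Large", 8), ("XX-Large", 16)]

# RAM classes in GB: the first class whose (exclusive) upper bound exceeds the RAM.
RAM_CLASSES = [("Small", 1.024), ("Medium", 2.048), ("Large", 8.2), ("X-Large", 16.0), ("XX-Large", 32.0)]


def classify_cpu(vcpus: int) -> Optional[str]:
    for name, limit in CPU_CLASSES:
        if vcpus <= limit:
            return name
    return None  # more vCPUs than the largest class allows


def classify_ram(ram_gb: float) -> Optional[str]:
    for name, limit in RAM_CLASSES:
        if ram_gb < limit:
            return name
    return None  # more RAM than the largest class allows


def profile_for(vcpus: int, ram_gb: float) -> str:
    """Smallest CPU/RAM combination that fits, or "not covered"."""
    cpu = classify_cpu(vcpus)
    ram = classify_ram(ram_gb)
    if cpu is None or ram is None:
        return "not covered"
    return f"{cpu} CPU / {ram} RAM"


if __name__ == "__main__":
    for vcpus, ram in [(2, 4.0), (1, 0.5), (32, 4.0)]:
        print(vcpus, ram, profile_for(vcpus, ram))
```

For example, `profile_for(2, 4.0)` returns `"Medium CPU / Large RAM"`, while `profile_for(32, 4.0)` returns `"not covered"` because 32 vCPUs exceeds the XX-Large bound of 16.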

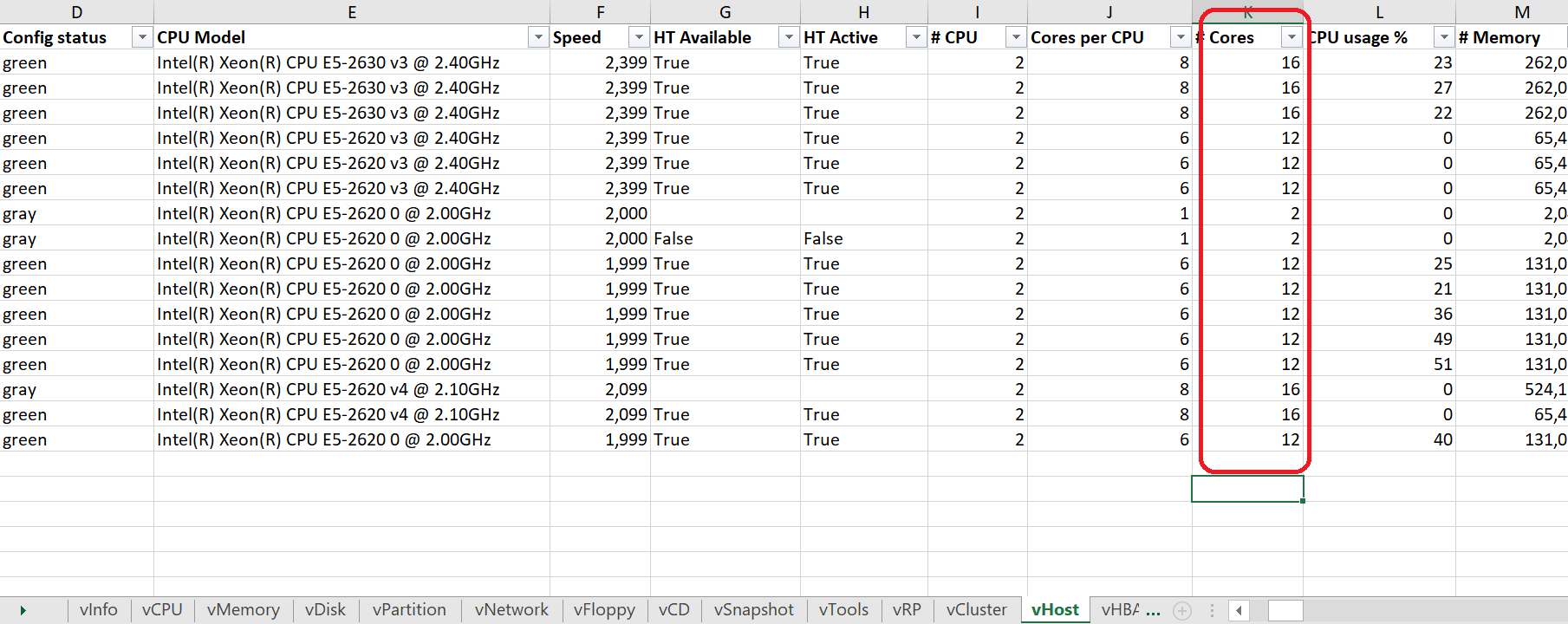

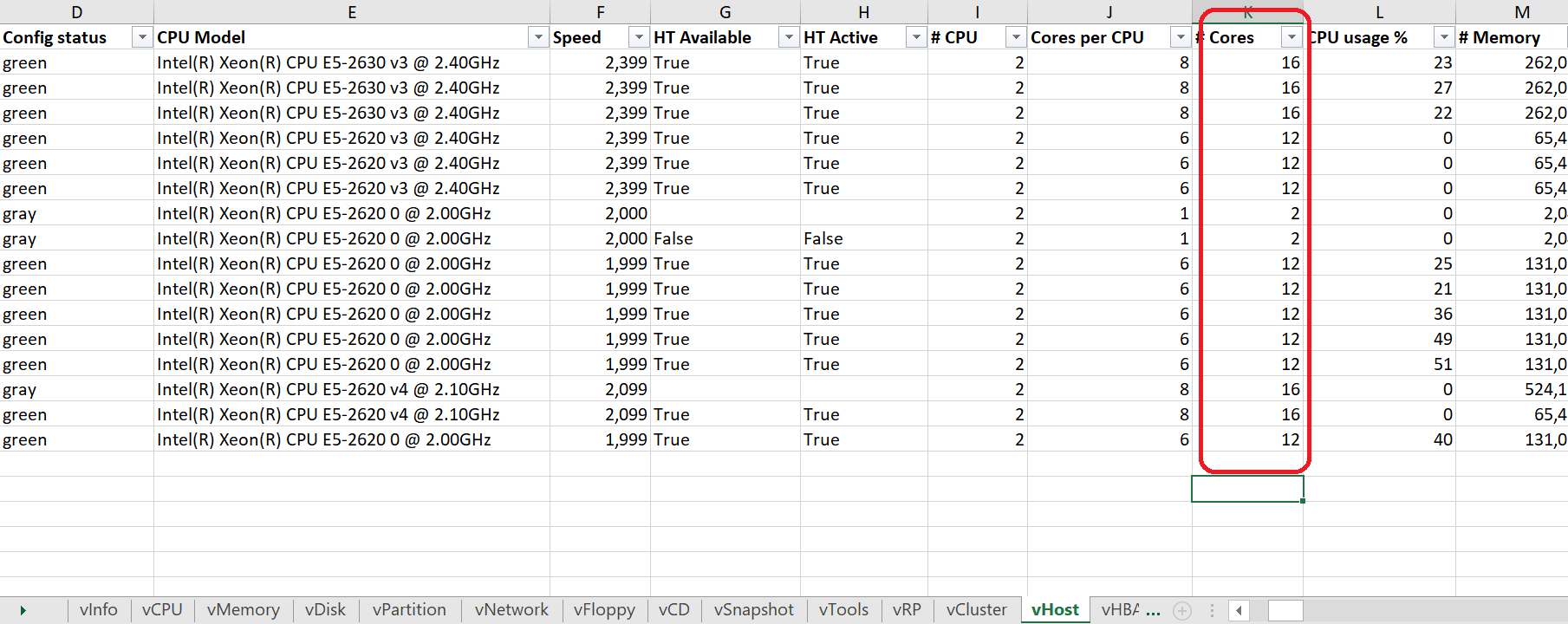

What does the Cores column mean in the RVTool summary sheet?

It indicates the total number of cores available on the VM's host. These values are collected from the vHost tab of the RVTools export.

What happens to VMs that are not covered because they do not fit one of the 25 server virtualization profiles?

Analysis has shown that about 85% of VMs fit in these 25 server virtualization profiles. For the handful of VMs that do not fit, SEs can review them with the customer. Often some VMs are over-provisioned and would fit in one of the profiles.

These changes need to be made manually in the RVTools Excel file, which is then imported into Sizer again for a revised sizing summary.

How do I handle errors associated with importing RVTools?

Make sure you used the latest version of RVTools and that the output has not been tampered with. Also, make sure you have read/write rights on the file before uploading.